Qwen2.5-Omni unveiled – a new flagship end-to-end multimodal model in the Qwen series. Designed for comprehensive multimodal perception, it seamlessly processes diverse inputs including text, images, audio, and video, while delivering real-time streaming responses through both text generation and natural speech synthesis. The Qwen2.5-Omni-7B model is available for trial on Qwen Chat and is openly available on Hugging Face, ModelScope, DashScope, and GitHub, with technical documentation in their Paper.

Qwen2.5-Omni Key Features and Innovations

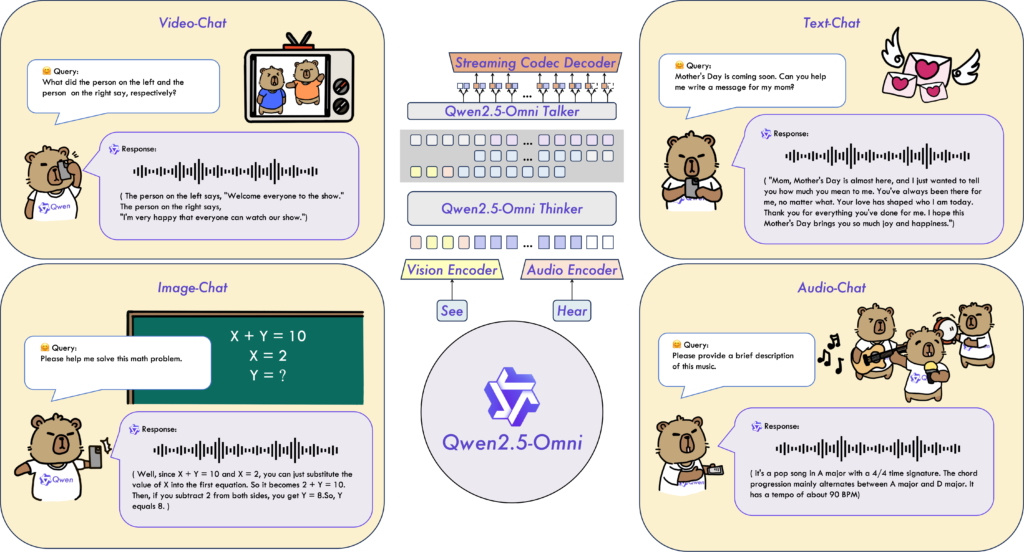

- Omni and Novel Architecture: Thinker-Talker: Qwen2.5-Omni employs a novel Thinker-Talker architecture which is an end-to-end multimodal model designed to perceive diverse modalities, including text, images, audio, and video, while simultaneously generating text and natural speech responses in a streaming manner. The “Thinker” acts as a brain, processing and understanding multimodal inputs and generating text representations. The “Talker” operates as a human mouth, converting these representations into natural speech in real-time.

- TMRoPE (Time-aligned Multimodal RoPE): A novel position embedding technique designed to synchronize the timestamps of video inputs with audio, crucial for accurate audiovisual processing.

- Real-Time Voice and Video Chat: The architecture is specifically designed for fully real-time interactions, supporting chunked input and immediate output.

- Natural and Robust Speech Generation: The model surpasses many existing streaming and non-streaming alternatives, demonstrating superior robustness and naturalness in speech generation.

- Strong Performance Across Modalities: The model exhibits exceptional performance across all modalities when benchmarked against similarly sized single-modality models. Qwen2.5-Omni outperforms the similarly sized Qwen2-Audio in audio capabilities and achieves comparable performance to Qwen2.5-VL-7B.

- Excellent End-to-End Speech Instruction Following: The model performs exceptionally well on end-to-end speech instruction following tasks, achieving results that rival its effectiveness with text inputs. This is evidenced by benchmarks such as MMLU and GSM8K.

Qwen2.5-Omni Architecture Details

The Qwen2.5-Omni utilizes the Thinker-Talker architecture, comprising:

- Thinker: A Transformer decoder that acts as the brain, processing text, audio, and video modalities and generating high-level representations and corresponding text. It includes encoders for audio and images to facilitate information extraction.

- Talker: A dual-track autoregressive Transformer Decoder that functions like a human mouth, receiving high-dimensional representations from the Thinker and outputting discrete tokens of speech fluidly. The Talker receives all historical context information from the Thinker. This cohesive single model design enables end-to-end training and inference.

Performance Evaluation

Qwen2.5-Omni underwent comprehensive evaluation, showcasing strong performance across all modalities compared to similarly sized single-modality and closed-source models, including Qwen2.5-VL-7B, Qwen2-Audio, and Gemini-1.5-pro. In tasks requiring the integration of multiple modalities, such as OmniBench, it achieves state-of-the-art performance. Furthermore, in single-modality tasks, it excels in areas including speech recognition (Common Voice), translation (CoVoST2), audio understanding (MMAU), image reasoning (MMMU, MMStar), video understanding (MVBench), and speech generation (Seed-tts-eval and subjective naturalness).

Future Directions

The Qwen team plans to improve the model’s ability to follow voice commands and enhance audio-visual collaborative understanding. Future work will also focus on integrating more modalities to create an even more versatile omni-model. The team is eager to hear user feedback and see the innovative applications developed with Qwen2.5-Omni.

Conclusion

Qwen2.5-Omni represents a significant leap forward in multimodal AI, offering real-time processing of text, images, audio, and video with natural speech output. Its innovative Thinker-Talker architecture, strong performance across modalities, and real-time capabilities position it as a powerful tool for creating more natural and intuitive human-computer interactions. The model’s open availability fosters further innovation and development within the AI community.

Key Takeaways

- Qwen2.5-Omni is a new flagship end-to-end multimodal model from the Qwen series.

- It features a novel Thinker-Talker architecture for processing text, images, audio, and video in real-time.

- The model generates both text and natural speech responses.

- It incorporates TMRoPE (Time-aligned Multimodal RoPE) for improved audiovisual synchronization.

- Qwen2.5-Omni demonstrates state-of-the-art performance across multiple modalities and tasks.

- The model is designed for real-time voice and video chat applications.

- It showcases excellent performance in end-to-end speech instruction following.

- Qwen2.5-Omni is openly available on various platforms, including Hugging Face and ModelScope.

Links

Announcement: Qwen2.5 Omni: See, Hear, Talk, Write, Do It All!