Google has announced significant advancements in its Gemini AI models, focusing on enhanced multimodal capabilities, improved reasoning, and expanded developer tools. These updates include an experimental version of Gemini 2.0 Flash with native image output, the open Gemma-3-27B-IT model, and new API features that improve usability across various domains.

Model Enhancements

- Gemini 2.0 Flash (experimental image output): An experimental version of Gemini 2.0 Flash with native image generation and editing was made available to developers on March 12, 2025. It lets developers create and refine visuals directly within the Gemini ecosystem, for use cases such as multimedia content creation and interactive design workflows.

- Gemma-3-27B-IT: The instruction-tuned ("IT") 27-billion-parameter model in the open Gemma 3 series, which accepts both text and image input. It is available via Google AI Studio and the Gemini API.

- Embedding Models: The experimental gemini-embedding-exp-03-07 model was introduced earlier in March, providing improved text embeddings for tasks such as semantic search and recommendation systems.
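To illustrate what a native image-generation request might look like, here is a minimal Python sketch that builds the JSON body for a REST `generateContent` call and decodes image parts from a response. It assumes the experimental model ID `gemini-2.0-flash-exp` and a `responseModalities` generation setting; both are assumptions to verify against the current Gemini API documentation, and `build_image_request` and `extract_images` are hypothetical helper names. The sketch only builds and parses JSON; sending it with an API key is left to any HTTP client.

```python
import base64
import json

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
# Assumption: experimental model ID with native image output; experimental
# IDs change over time, so check the current model list before use.
IMAGE_MODEL = "gemini-2.0-flash-exp"


def build_image_request(prompt: str, model: str = IMAGE_MODEL) -> tuple[str, dict]:
    """Return (url, body) for a generateContent call asking for text + image output."""
    url = f"{API_ROOT}/models/{model}:generateContent"
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        # Assumption: requesting both modalities lets the model return images.
        "generationConfig": {"responseModalities": ["Text", "Image"]},
    }
    return url, body


def extract_images(response: dict) -> list[bytes]:
    """Decode every base64 image part found in a generateContent response."""
    images = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            inline = part.get("inlineData")
            if inline and inline.get("mimeType", "").startswith("image/"):
                images.append(base64.b64decode(inline["data"]))
    return images


url, body = build_image_request("Generate a watercolor illustration of a lighthouse at dawn.")
print(url)
print(json.dumps(body, indent=2))
```

For editing rather than generating from scratch, the existing image would travel as an additional inline image part next to the text instruction.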

API and SDK Updates

- YouTube Integration: Developers can now use YouTube URLs as a media source for tasks like video analysis and summarization. Inline video support (up to 20MB) has also been added.

- Search Tool Integration: Gemini models now support Google Search as a tool within the API, enabling real-time data retrieval for complex queries.

- SDK Releases: The Google Gen AI SDK for TypeScript and JavaScript was made available for public preview on March 11, expanding accessibility for developers working with web-based applications.
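The video and search features above come down to how a request body is assembled. The following Python sketch builds `generateContent` bodies that reference a YouTube URL, embed a small video inline, and optionally enable Google Search as a tool. The helper names are hypothetical, and the REST field names (`file_data`, `inline_data`, `google_search`) reflect the Gemini API's REST format at the time of writing; verify them against the current reference before relying on them.

```python
import base64

# Conservative reading of the 20 MB inline limit. The limit covers the whole
# request, so passing this check on the encoded video alone is necessary,
# not sufficient.
MAX_INLINE_BYTES = 20 * 1000 * 1000


def youtube_part(url: str) -> dict:
    """Content part referencing a public YouTube video by URL."""
    return {"file_data": {"file_uri": url}}


def inline_video_part(data: bytes, mime_type: str = "video/mp4") -> dict:
    """Content part embedding a small video directly in the request as base64."""
    encoded_len = 4 * ((len(data) + 2) // 3)  # base64 grows data by about 4/3
    if encoded_len > MAX_INLINE_BYTES:
        raise ValueError("video too large to send inline; upload it instead")
    return {"inline_data": {"mime_type": mime_type,
                            "data": base64.b64encode(data).decode("ascii")}}


def build_request(prompt: str, media_part: dict, use_search: bool = False) -> dict:
    """generateContent body with the media part first, then the text instruction."""
    body = {"contents": [{"parts": [media_part, {"text": prompt}]}]}
    if use_search:
        # Enables Google Search as a tool for real-time retrieval.
        body["tools"] = [{"google_search": {}}]
    return body


summary_request = build_request(
    "Summarize this video in three bullet points.",
    youtube_part("https://www.youtube.com/watch?v=VIDEO_ID"),  # placeholder ID
)
```

Keeping each part builder separate makes it easy to swap a YouTube reference for inline bytes without touching the rest of the request.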

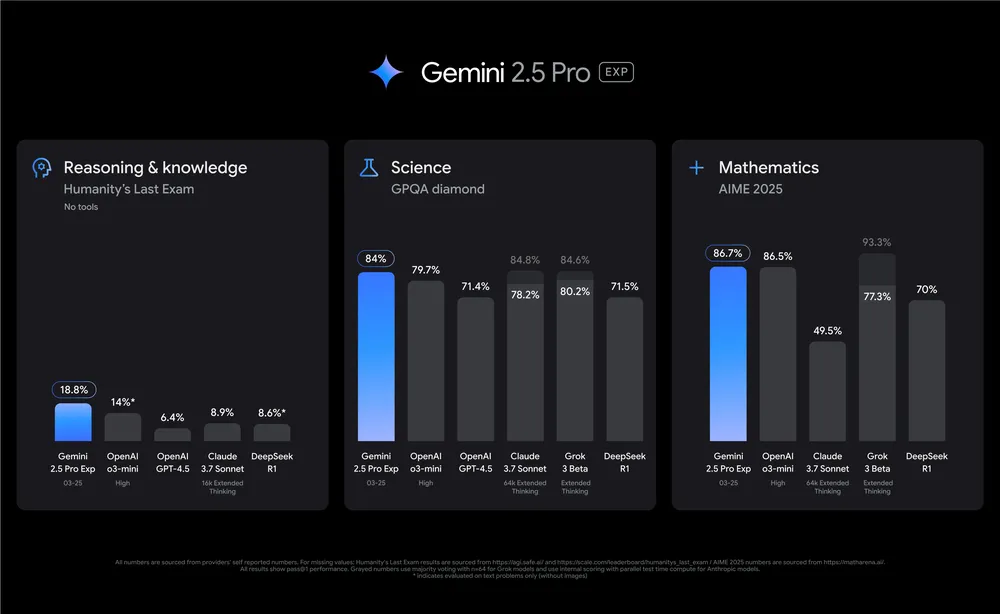

Performance Improvements

The updates emphasize cost efficiency and speed:

- Gemini 2.0 Flash-Lite (GA): Now generally available, Flash-Lite is the cost-optimized member of the Gemini 2.0 family, aimed at fast, high-volume workloads.

- Decoding Speed: TurboS, which is Tencent's Hunyuan model rather than a Gemini model, reports roughly twice the decoding speed under similar deployment conditions.

- Expanded Context Windows: Models such as Gemini 2.0 Flash accept up to 1 million input tokens, supporting long-context tasks such as analyzing large datasets or generating detailed reports.
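Before sending a very long input, it helps to check whether it plausibly fits in a 1-million-token window. The sketch below uses the rough rule of thumb from Google's documentation that one token is about four characters of English text. The helper names and the 90% safety margin are illustrative assumptions; the API's `countTokens` method gives exact counts.

```python
CONTEXT_LIMIT_TOKENS = 1_000_000  # the "up to 1 million tokens" figure above
CHARS_PER_TOKEN = 4               # rough heuristic for English text, not a tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count; ceiling division so short text never rounds to 0."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], safety_margin: float = 0.9) -> bool:
    """True if the combined estimate stays under a fraction of the window.

    The margin absorbs estimation error and leaves room for prompt text.
    """
    total = sum(estimate_tokens(doc) for doc in documents)
    return total <= CONTEXT_LIMIT_TOKENS * safety_margin


# Hypothetical report split into chunks.
report_chunks = ["Quarterly revenue table row. " * 10_000, "Appendix note. " * 5_000]
print(fits_in_context(report_chunks))
```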

Use Cases

The updates open new possibilities across industries:

- Multimedia Content Creation: Image generation/editing capabilities enable developers to create dynamic visuals for marketing and design purposes.

- Semantic Analysis: Embedding models improve recommendation systems and search engines.

- Video Summarization: YouTube integration allows efficient extraction of insights from video content.

- Enterprise Applications: Enhanced multimodal reasoning supports complex workflows in fields like finance, healthcare, and education.
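Embedding-based semantic search, the use case named for the embedding model above, typically ranks documents by cosine similarity between a query vector and precomputed document vectors. A minimal sketch follows. In practice the vectors would come from an embedding model such as gemini-embedding-exp-03-07; here they are small hand-written hypothetical values so the example runs offline, and `rank` is an illustrative helper name.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query: list[float], corpus: dict[str, list[float]],
         top_k: int = 3) -> list[tuple[str, float]]:
    """Return the top_k corpus entries most similar to the query vector."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical 3-dimensional vectors; real embedding vectors are far longer.
corpus = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "gift-cards": [0.7, 0.2, 0.3],
}
query = [0.8, 0.1, 0.1]  # stands in for the embedding of a refund question
print(rank(query, corpus, top_k=2))  # refund-policy first, then gift-cards
```

The same ranking step serves recommendations: replace the query with the vector of an item a user liked.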

Conclusion

Google’s March 2025 updates to its Gemini models mark a significant leap in AI capabilities, particularly in multimodal reasoning and developer accessibility. The introduction of experimental features like native image generation reflects Google’s commitment to pushing boundaries in AI innovation while addressing real-world challenges. With expanded tools, faster processing speeds, and broader context windows, Gemini continues to set benchmarks for productivity-focused AI applications.

Key Takeaways

- Gemini 2.0 Flash introduces native image generation, enabling visual content creation directly within the AI ecosystem.

- Gemma-3-27B-IT adds an instruction-tuned open model to the lineup, supporting both text and image inputs.

- API updates improve usability, including YouTube integration for video analysis and search tool support for real-time queries.

- SDK releases expand developer access, with TypeScript/JavaScript tools now available in public preview.

- Performance improvements include the cost-efficient Flash-Lite GA release, optimized scalability, and expanded context windows (up to 1 million tokens).

- Use cases span multimedia creation, semantic analysis, enterprise workflows, and video summarization.

Links

Official: DeepMind Gemini

Announcement: Gemini 2.5: Our most intelligent AI model

Live demo: Gemini 2.5 Pro