What is Google Gemma 3?

Google Gemma 3 is the latest generation of open models from Google, built on the success of previous Gemma models. Gemma has already had significant impact, with over 100 million downloads and a vibrant community that has created more than 60,000 variants. Gemma 3 is designed for speed and efficiency and can run on workstations, laptops, and even smartphones, enabling developers to build and deploy responsible AI applications at scale.

Features of Google Gemma 3

- Gemma 3 is the latest generation of open models from Google, designed for speed, efficiency, and versatility.

- It includes models ranging from 1 billion to 27 billion parameters, including a compact 1B model for resource-constrained devices.

- Gemma 3 offers pretrained support for over 140 languages, and its 4B, 12B, and 27B models accept multimodal input (text, images, and short videos) for building interactive AI experiences.

- Its context window of 128,000 tokens (32K for the 1B model) allows it to process large amounts of information and generate more coherent responses.

- It offers improved function calling and structured output for building intelligent agents.

- Gemma 3 is designed for easy fine-tuning and deployment across various platforms and frameworks.

Why Gemma 3 Stands Out

Google Gemma 3 is a family of models available in four sizes: 1B, 4B, 12B, and 27B parameters. In response to community feedback, Gemma 3 includes a compact 1-billion-parameter version, opening up possibilities for running AI on resource-constrained devices. This range lets developers choose the right model size for their project, whether that is a lightweight model for mobile or a larger model for a service that handles complex documents.
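To get a feel for which size fits which hardware, a rough rule of thumb is that the weights alone need about (number of parameters × bytes per parameter) of memory. The minimal sketch below applies that rule to the nominal sizes. The precision table and the 8 GB budget in the example are illustrative assumptions, and real memory use is higher once the KV cache, activations, and runtime overhead are included.

```python
# Nominal Gemma 3 sizes, in billions of parameters (exact counts differ slightly).
GEMMA3_SIZES_B = {"1B": 1, "4B": 4, "12B": 12, "27B": 27}

# Storage cost of one parameter at common precisions (an illustrative set).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes directly.
    KV cache, activations, and framework overhead are NOT included.
    """
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision!r}")
    return params_billion * BYTES_PER_PARAM[precision]


def sizes_that_fit(budget_gb: float, precision: str) -> list[str]:
    """Return the nominal sizes whose weights fit within a memory budget."""
    return [
        name
        for name, billions in GEMMA3_SIZES_B.items()
        if weight_memory_gb(billions, precision) <= budget_gb
    ]


if __name__ == "__main__":
    for name, billions in GEMMA3_SIZES_B.items():
        bf16 = weight_memory_gb(billions, "bf16")
        int4 = weight_memory_gb(billions, "int4")
        print(f"{name}: ~{bf16:.1f} GB in bf16, ~{int4:.1f} GB in int4")
    # Which sizes could fit (weights only) in an 8 GB budget at 4-bit precision?
    print(sizes_that_fit(8.0, "int4"))  # ['1B', '4B', '12B']
```

By this estimate the 27B model needs roughly 54 GB for bf16 weights but about 13.5 GB at 4-bit precision, which is why quantization matters so much on laptops and phones.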

Gemma 3 is built from the same research and technology that powers Google's Gemini 2.0 models. It performs well on a wide range of language tasks and offers pretrained support for over 140 languages, enabling applications to reach a global audience. The 4B, 12B, and 27B models also accept multimodal input, including text, images, and short videos, which enables interactive and intelligent experiences; the 1B model is text-only. Within a single model, Gemma 3 can hold complex multimodal conversations, tackle challenging math and coding problems, and more.
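Multi-turn conversations reach Gemma as a single prompt in which each turn is wrapped in `<start_of_turn>` and `<end_of_turn>` markers, with the assistant's role named `model`. The sketch below renders a text-only conversation in that format. It assumes, as we understand the Hugging Face chat template to do, that there is no separate system role and that system text is prepended to the first user turn. Image inputs require additional special tokens and are left out. In practice, `tokenizer.apply_chat_template` in Transformers builds this string for you, so treat the function as an illustration of the format rather than a replacement.

```python
def format_gemma_prompt(messages: list[dict[str, str]],
                        add_generation_prompt: bool = True) -> str:
    """Render a text-only chat in Gemma's turn-based prompt format.

    Each message is a dict with "role" ("system", "user", or "assistant")
    and "content". Assumption: system text is folded into the first user
    turn, because the format has no dedicated system role.
    """
    pending_system = ""
    parts = ["<bos>"]
    for message in messages:
        role, content = message["role"], message["content"].strip()
        if role == "system":
            # Hold system text until the next user turn.
            pending_system += content + "\n\n"
            continue
        if role not in ("user", "assistant"):
            raise ValueError(f"unsupported role: {role!r}")
        if role == "user" and pending_system:
            content = pending_system + content
            pending_system = ""
        # The assistant speaks as "model" in Gemma's format.
        speaker = "model" if role == "assistant" else "user"
        parts.append(f"<start_of_turn>{speaker}\n{content}<end_of_turn>\n")
    if add_generation_prompt:
        # Open a model turn so generation continues as the assistant.
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


if __name__ == "__main__":
    chat = [
        {"role": "system", "content": "Answer briefly."},
        {"role": "user", "content": "Bonjour ! Combien font 2 + 2 ?"},
        {"role": "assistant", "content": "4"},
        {"role": "user", "content": "And times 3?"},
    ]
    print(format_gemma_prompt(chat))
```

If you tokenize a string built this way yourself, disable automatic special-token insertion (for example `add_special_tokens=False` in Transformers) so that `<bos>` is not added twice.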

A significant enhancement in Gemma 3 is the larger context window of 128,000 tokens (32K for the 1B model), which lets the model process large amounts of information, such as long documents, and generate more coherent and insightful responses. For building intelligent agents, Gemma 3 offers improved function calling and structured output, making it easier to integrate with other tools and services.
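Function calling with Gemma is typically driven by the prompt: the application describes the available functions, asks the model to reply with a structured call, then parses and executes that call. The sketch below shows the application side of this pattern. The JSON shape (`name` plus `arguments`), the `get_weather` tool, and the instruction text are hypothetical choices made for illustration, not a format defined by Gemma.

```python
import json
from typing import Any, Callable


def get_weather(city: str, unit: str = "celsius") -> dict[str, Any]:
    """Hypothetical tool; a real app would call a weather service here."""
    return {"city": city, "temperature": 21, "unit": unit}


# Registry of the only functions the model is allowed to trigger.
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}

# Hypothetical instruction text placed in the prompt alongside the user request.
TOOL_INSTRUCTIONS = (
    "You can call this function:\n"
    "get_weather(city: str, unit: str = 'celsius') -> current weather.\n"
    'To call it, reply ONLY with JSON: {"name": ..., "arguments": {...}}'
)


def dispatch_tool_call(model_output: str) -> Any:
    """Extract a JSON function call from model text and run the matching tool.

    Tolerates extra text around the JSON (e.g. a Markdown fence) by taking
    the span from the first "{" to the last "}".
    """
    start, end = model_output.find("{"), model_output.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    # json.JSONDecodeError is a subclass of ValueError.
    call = json.loads(model_output[start:end + 1])
    name, args = call.get("name"), call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"model requested unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("'arguments' must be a JSON object")
    return TOOLS[name](**args)


if __name__ == "__main__":
    # Pretend this string came back from the model.
    reply = 'Sure.\n{"name": "get_weather", "arguments": {"city": "Paris"}}'
    print(dispatch_tool_call(reply))
```

The tool's result would then typically be passed back to the model in a new turn so it can compose the final answer. Keeping an explicit registry means the model can only trigger functions you have chosen to expose.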

Like previous generations, Gemma 3 is designed for fine-tuning, allowing developers to adapt it to specific needs, specialize it for particular industries, improve its performance in certain languages, or tailor its output style. Gemma 3 can be fine-tuned on Google Colab, Vertex AI, or a user's own GPU. Deployment is simplified by support in popular frameworks such as Hugging Face Transformers, JAX, Keras, and more. Google has also partnered with industry leaders such as NVIDIA, Hugging Face, and AMD to help ensure speed, efficiency, and seamless integration.
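Fine-tuning starts with data in a consistent conversational shape. The sketch below turns (prompt, response) pairs into JSON Lines records holding a `messages` list of `role`/`content` entries. Chat templates and trainers such as Hugging Face TRL's `SFTTrainer` commonly accept this layout, but the field names, the evaluation fraction, and the fixed seed are assumptions to adapt to your own tooling. `ensure_ascii=False` keeps non-English text readable, which helps when tailoring the model to particular languages.

```python
import json
import random


def to_chat_record(prompt: str, response: str) -> dict:
    """One training example: a user turn followed by the desired reply."""
    return {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": response},
        ]
    }


def split_to_jsonl(pairs: list[tuple[str, str]], eval_fraction: float = 0.1,
                   seed: int = 0) -> tuple[str, str]:
    """Shuffle pairs deterministically and return (train_jsonl, eval_jsonl)."""
    if not 0.0 <= eval_fraction < 1.0:
        raise ValueError("eval_fraction must be in [0, 1)")
    records = [to_chat_record(p, r) for p, r in pairs]
    random.Random(seed).shuffle(records)  # fixed seed -> reproducible split
    n_eval = int(len(records) * eval_fraction)
    eval_records, train_records = records[:n_eval], records[n_eval:]

    def dump(rows: list[dict]) -> str:
        # One JSON object per line; non-ASCII characters are kept as-is.
        return "".join(json.dumps(row, ensure_ascii=False) + "\n" for row in rows)

    return dump(train_records), dump(eval_records)


if __name__ == "__main__":
    examples = [(f"Question {i}?", f"Answer {i}.") for i in range(9)]
    examples.append(("Wie spät ist es?", "Es ist drei Uhr."))
    train_jsonl, eval_jsonl = split_to_jsonl(examples, eval_fraction=0.2)
    print(len(train_jsonl.splitlines()), len(eval_jsonl.splitlines()))  # 8 2
```

The two returned strings can be written to files such as `train.jsonl` and `eval.jsonl` (opened with `encoding="utf-8"`) and loaded by whichever fine-tuning workflow you use.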

Applications for Developers

Gemma 3 is readily accessible through Google AI Studio, the Google GenAI SDK, and platforms such as Kaggle, Vertex AI, and Hugging Face. The models can also be downloaded and run locally. The Gemmaverse showcases applications built by the Gemma community.

Google Gemma 3 Models

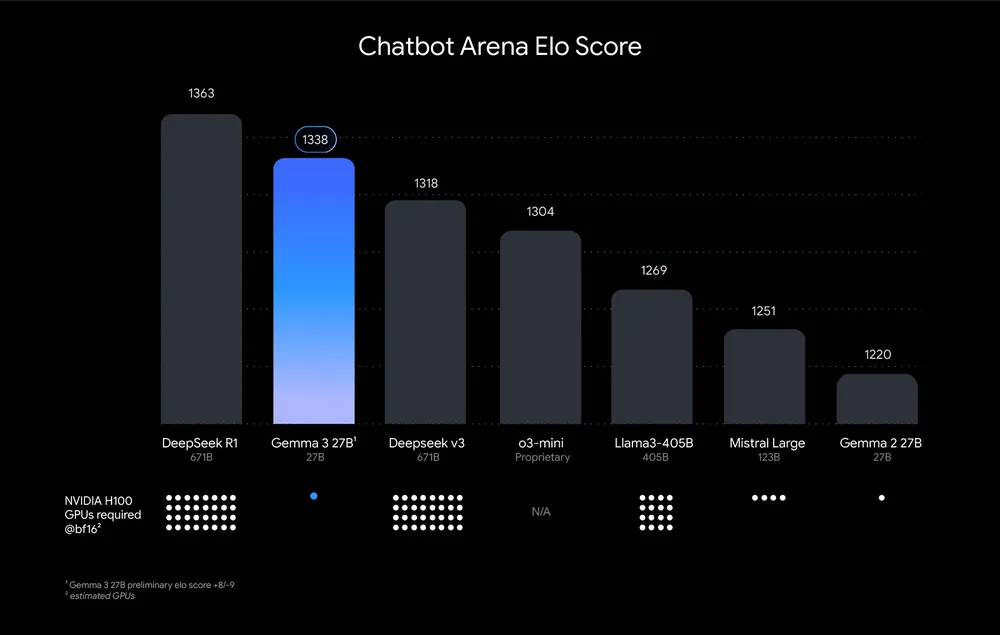

Chatbot Arena Elo scores

Links

Official: Introducing Gemma 3: The Developer Guide – Google Developers Blog

Demo: Gemma 3 27B